Data analysis and validation in biomedical engineering

Modern biomedical engineering, and clinical practice with it, increasingly relies on integrated measurement and interpretation chains in which sensors, acquisition paths, processing algorithms, imaging and image reconstruction techniques, and the organizational and regulatory frameworks of clinical engineering form a continuous flow of information. This article explains how sensors for medical applications are designed, how reliable biological signal acquisition chains are built, how images and signals are converted into clinical information, and why technology management and standardization in the hospital environment determine the safety and effectiveness of therapy.

Sensors

Biomedical sensors convert mechanical, electrical, optical, or chemical quantities into an electrical form compatible with measuring equipment. Their common feature is transduction, i.e., the conversion of one form of energy into another, and the designer’s task is to minimize distortion and interference at the interface between physiology and electronics. Biomedical sensors can be divided into physical sensors (e.g., pressure, flow, temperature), electrochemical sensors (e.g., pH, pO₂, pCO₂, glucose), optical sensors (e.g., pulse oximetry, immunosensors), and biopotential electrodes for EEG, ECG, or EMG signals. These classes form the basis for the chapters on sensors in the textbook (Bronzino, 2000), which emphasize that an efficient information chain begins with the correct interaction of the sensor with tissue and body fluids.

Biopotential electrodes, used to record bioelectrical activity, perform transduction at the metal–electrolyte interface. A half-cell potential develops at this interface, and its value depends on the electrode material and the composition of the solution. The stability and reversibility of the redox reactions determine noise, drift, and artifacts, so the selection of materials (e.g., Ag/AgCl for reference electrodes) and control of the ionic environment are critical for reliable measurement of slowly varying, low-frequency biological signals. These phenomena are described in the electrochemical literature and summarized in sensing compendia; clinical practice, however, requires translating them into specific design requirements: low contact impedance, minimal polarization, stable references, and repeatable geometry and contact pressure.
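The dependence of the half-cell potential on the ionic environment can be illustrated with the Nernst equation for the Ag/AgCl couple. The sketch below is only an illustration under stated assumptions: the function names are hypothetical, the standard potential of about 0.2223 V (vs. the standard hydrogen electrode at 25 °C) is a textbook value, and its own temperature dependence is ignored.

```python
import math

R = 8.314462618   # J/(mol*K), molar gas constant
F = 96485.33212   # C/mol, Faraday constant
E0_AGCL = 0.2223  # V vs. SHE at 25 °C (textbook value; assumed temperature-independent here)


def agcl_half_cell_potential(chloride_activity, temp_k=298.15, e0=E0_AGCL):
    """Nernst potential (V) for AgCl + e- <-> Ag + Cl-.

    E = E0 - (R*T/F) * ln(a_Cl): higher chloride activity lowers the potential.
    """
    if chloride_activity <= 0:
        raise ValueError("chloride activity must be positive")
    return e0 - (R * temp_k / F) * math.log(chloride_activity)


def potential_shift(a_before, a_after, temp_k=298.15):
    """Change in half-cell potential (V) when chloride activity changes."""
    return (agcl_half_cell_potential(a_after, temp_k)
            - agcl_half_cell_potential(a_before, temp_k))


if __name__ == "__main__":
    # Hypothetical scenario: chloride activity under the electrode rises by 10 %.
    shift_mv = 1e3 * potential_shift(0.10, 0.11)
    print(f"offset shift: {shift_mv:.2f} mV")
```

At 25 °C a 10 % change in chloride activity moves the offset by roughly 2.4 mV, which is larger than a typical surface ECG amplitude of about 1 mV. This is why an unstable ionic environment shows up as baseline drift in low-frequency recordings.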

Among electrochemical sensors, electrode-based blood gas sensors and enzymatic sensors (e.g., for glucose) play a special role. For both, the stability and reproducibility of the reference electrode (Ag/AgCl, less often Ag/AgBr) are prerequisites for reliable voltammetry and potentiometry. Proper selection of the electrolyte composition and control of aging processes limit the drift of the reference system, which in turn affects the precision of in vivo and in vitro calibration.

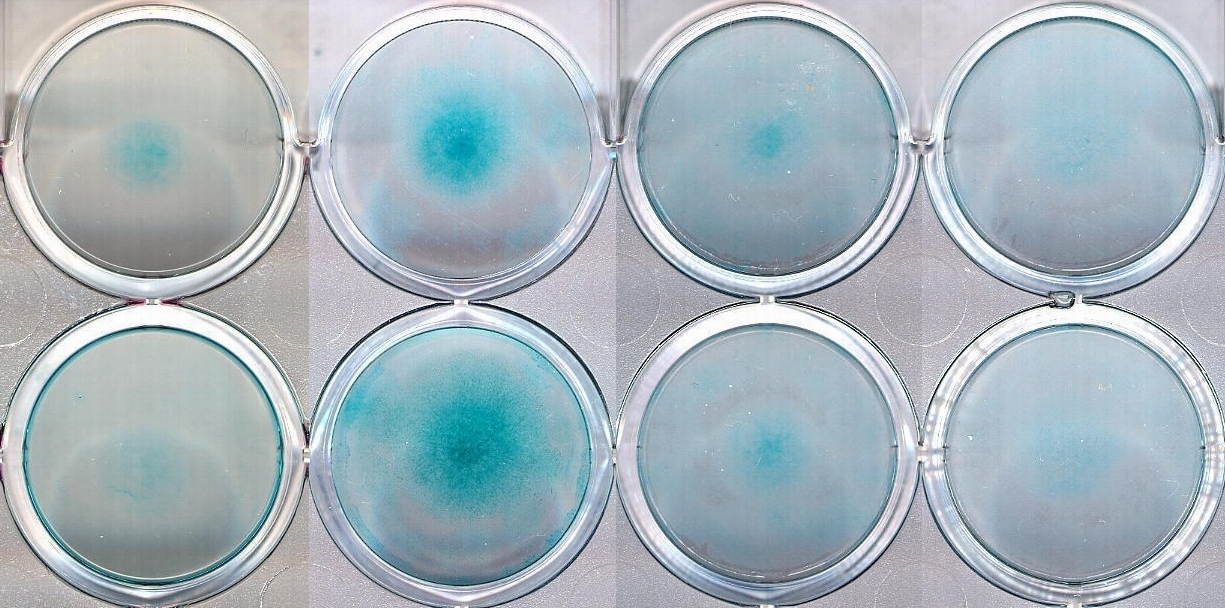

Optical sensors, both fiber optic and planar waveguide-based, rely on the modulation of light by a sample or an indicator. In practice, there are three basic schemes: direct influence of the analyte on the properties of the waveguide (e.g., refractometry using an evanescent wave or surface plasmon resonance), remote transport of light to the sample and back (in situ spectrophotometry), and the use of an indicator in a polymer matrix at the tip of the optical fiber. These architectures enable the construction of oximeters, gas sensors, glucose sensors, and immunosensors, increasingly with a focus on continuous monitoring, including in outpatient settings.

From the perspective of medical metrology, all these sensor families share a common challenge: designing an interface that is both biocompatible and electrically, optically, or chemically stable. The designer must simultaneously consider tissue contact, sterilizability, resistance to environmental interference, ergonomics of use, and the limitations of the analog signal paths in medical devices. Therefore, the chapters on sensors introduce not only transducer classes, but also the logic of selecting light sources, optical elements, detectors, and signal paths depending on the required resolution, response time, and signal-to-noise ratio.

Acquisition, compression, and analysis

The second link in the information chain involves signal acquisition, conditioning, and analysis. Biological signals are inherently non-stationary, low in amplitude, and susceptible to interference, so knowledge of their origin and spectral characteristics determines the choice of processing methods. The textbook follows a clear progression: from the classification of biosignals and the basics of frequency analysis, through acquisition and filtering techniques, to specific tools describing the time-frequency dynamics, nonlinearity, and complexity of biological systems.

In clinical practice, solutions that combine adaptive filtering and spectrum estimation methods with time-frequency representations are prevalent. The short-time Fourier transform (STFT) localizes events in both time and frequency, but its resolution is limited by the uncertainty principle: a longer analysis window improves frequency resolution at the cost of time resolution, and vice versa. Where a search for nonlinearity is justified, higher-order spectral analysis (bispectrum, trispectrum) makes it possible to distinguish signals generated by nonlinear processes from those generated by linear ones, which is crucial when assessing the interaction of biological rhythms. The textbook presents these tools as a modern toolbox for biomedical engineers.
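The time-frequency trade-off of the STFT can be made concrete with a small, dependency-free sketch. It uses a naive DFT rather than an FFT, so it is meant for illustration only; the test signal, sampling rate, and function names are assumptions, not taken from the textbook.

```python
import cmath
import math


def hann(n):
    """Periodic Hann window of length n."""
    return [0.5 - 0.5 * math.cos(2 * math.pi * i / n) for i in range(n)]


def stft_magnitude(signal, win_len, hop):
    """Magnitude STFT as a list of frames, each holding win_len//2 + 1 bins."""
    window = hann(win_len)
    n_bins = win_len // 2 + 1
    frames = []
    for start in range(0, len(signal) - win_len + 1, hop):
        seg = [signal[start + i] * window[i] for i in range(win_len)]
        frames.append([
            abs(sum(seg[i] * cmath.exp(-2j * math.pi * k * i / win_len)
                    for i in range(win_len)))
            for k in range(n_bins)
        ])
    return frames


def peak_frequencies(signal, fs, win_len, hop):
    """Dominant frequency (Hz) in each STFT frame."""
    frames = stft_magnitude(signal, win_len, hop)
    return [max(range(len(f)), key=f.__getitem__) * fs / win_len for f in frames]


FS = 200.0  # Hz, assumed sampling rate
# Synthetic rhythm: 10 Hz during the first second, 40 Hz during the second.
sig = [math.sin(2 * math.pi * (10.0 if n < 200 else 40.0) * n / FS)
       for n in range(400)]

WIN = 100  # samples: frequency resolution FS/WIN = 2 Hz, time resolution WIN/FS = 0.5 s
peaks = peak_frequencies(sig, FS, win_len=WIN, hop=50)
print(peaks)
```

Halving `WIN` would double the time resolution but also double the bin spacing to 4 Hz. The product of the two resolutions stays fixed, which is the practical face of the uncertainty principle.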

The growing role of telemetry and long-term monitoring means that data compression is no longer an option, but a necessity. Transform-domain (DCT, FFT), multiresolution (wavelet, subband), and hybrid algorithms for multichannel signals, such as ECG, are designed to preserve clinical relevance while minimizing bandwidth and memory requirements. It is worth noting that the same mechanisms that facilitate compression also serve to detect events and extract features in real-time streams.
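A minimal sketch of transform-domain compression is shown below: compute an orthonormal DCT, keep only the largest coefficients, and measure the distortion with the percent RMS difference (PRD), a figure often reported for ECG compression. The synthetic waveform and all function names are assumptions made for illustration. A practical codec would also quantize and entropy-code the retained coefficients.

```python
import math


def dct2(x):
    """Orthonormal DCT-II of a sequence."""
    n = len(x)
    out = []
    for k in range(n):
        s = sum(x[i] * math.cos(math.pi * (i + 0.5) * k / n) for i in range(n))
        out.append(s * math.sqrt((1.0 if k == 0 else 2.0) / n))
    return out


def idct2(c):
    """Inverse of dct2 (orthonormal DCT-III)."""
    n = len(c)
    out = []
    for i in range(n):
        s = c[0] * math.sqrt(1.0 / n)
        s += sum(c[k] * math.sqrt(2.0 / n) * math.cos(math.pi * (i + 0.5) * k / n)
                 for k in range(1, n))
        out.append(s)
    return out


def compress(x, keep):
    """Reconstruct x from its `keep` largest-magnitude DCT coefficients."""
    coeffs = dct2(x)
    order = sorted(range(len(coeffs)), key=lambda k: abs(coeffs[k]), reverse=True)
    kept = set(order[:keep])
    return idct2([c if k in kept else 0.0 for k, c in enumerate(coeffs)])


def prd(original, reconstructed):
    """Percent RMS difference between the original and the reconstruction."""
    num = sum((a - b) ** 2 for a, b in zip(original, reconstructed))
    den = sum(a ** 2 for a in original)
    return 100.0 * math.sqrt(num / den)


# Synthetic beat-like waveform (assumption): one narrow and one broad bump.
beat = [math.exp(-((n - 64) / 4.0) ** 2) + 0.2 * math.exp(-((n - 100) / 12.0) ** 2)
        for n in range(128)]

if __name__ == "__main__":
    for keep in (16, 32, 64):
        print(f"keep={keep:3d}  ratio={len(beat) / keep:.1f}:1  "
              f"PRD={prd(beat, compress(beat, keep)):.3f} %")
```

Because the transform is orthonormal, dropping the smallest coefficients first minimizes the squared reconstruction error for a given number of retained coefficients. The coefficients that survive are also the ones that carry the dominant waveform features, which is why the same representation is useful for event detection.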

Classic methods are complemented by tools inspired by complexity theory and machine learning. Neural networks in the analysis of sensorimotor, cardiac, and neurological signals introduce nonlinear mappings that can handle high-dimensional, noisy feature vectors. In turn, fractal and scaling measures describe the roughness of physiological dynamics, which helps characterize neurodegenerative diseases or sleep disorders. This set of methods does not replace physiological modeling, but creates a computational layer that increases the sensitivity and specificity of clinical classifiers.
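Sensitivity and specificity, the two figures of merit mentioned above, follow directly from the confusion matrix of a binary classifier. The sketch below assumes labels coded as 1 (condition present) and 0 (condition absent); the example labels are invented purely for illustration.

```python
def confusion_counts(y_true, y_pred):
    """Return (TP, FN, TN, FP) for binary labels coded as 1 and 0."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    tn = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 0)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    return tp, fn, tn, fp


def sensitivity_specificity(y_true, y_pred):
    """Sensitivity = TP / (TP + FN); specificity = TN / (TN + FP)."""
    tp, fn, tn, fp = confusion_counts(y_true, y_pred)
    if tp + fn == 0 or tn + fp == 0:
        raise ValueError("both classes must be present in y_true")
    return tp / (tp + fn), tn / (tn + fp)


# Hypothetical evaluation set: 4 recordings with the condition, 6 without.
y_true = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]
y_pred = [1, 1, 1, 0, 0, 0, 0, 0, 1, 0]
sens, spec = sensitivity_specificity(y_true, y_pred)
print(f"sensitivity={sens:.2f}, specificity={spec:.2f}")
```

Reporting both values matters because a classifier can trade one for the other simply by moving its decision threshold.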

It is worth emphasizing that the effectiveness of processing algorithms depends on the acquisition conditions. Robustness to motion, stable contact impedance, an appropriate choice of A/D converter dynamic range and anti-aliasing filters, and galvanic isolation of the signal path from the patient are boundary conditions. Neglecting them results in a systematic error greater than the gain from the most sophisticated analysis. This aspect, which integrates sensor and acquisition circuit design, is discussed in the section of the textbook devoted to medical instruments, particularly biopotential amplifiers and non-invasive methods for measuring cardiovascular parameters.
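Two of these boundary conditions lend themselves to quick back-of-the-envelope checks: where an unfiltered interferer lands after sampling, and how much dynamic range an ideal converter offers. The sketch below uses the standard folding rule for aliasing and the ideal quantization SNR of 6.02·N + 1.76 dB for a full-scale sine. The function names and the example numbers are assumptions.

```python
import math


def alias_frequency(f_signal, fs):
    """Apparent frequency (Hz) of a tone at f_signal >= 0 after sampling at fs."""
    f = math.fmod(f_signal, fs)
    return fs - f if f > fs / 2 else f


def ideal_adc_snr_db(bits):
    """Ideal quantization SNR (dB) of an N-bit converter for a full-scale sine."""
    return 6.02 * bits + 1.76


def input_referred_lsb(full_scale_v, bits, gain):
    """Size of one converter step (V) referred to the amplifier input."""
    return full_scale_v / (2 ** bits) / gain


if __name__ == "__main__":
    # Hypothetical case: 60 Hz mains pickup sampled at 100 Hz with no anti-aliasing filter.
    print("60 Hz appears at", alias_frequency(60.0, 100.0), "Hz")
    print("12-bit ideal SNR:", ideal_adc_snr_db(12), "dB")
    print("LSB at input:", input_referred_lsb(3.3, 12, 1000.0), "V")
```

In this hypothetical case the interferer folds to 40 Hz, inside the band where ECG energy resides, and no later digital filter can separate it from the signal. This is the sense in which acquisition errors exceed what analysis can recover.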

Imaging

The third pillar of the information chain is imaging. The spectrum of techniques covered in the textbook — from classic X-ray diagnostics and angiography, through computed tomography (CT), magnetic resonance imaging (MRI), nuclear medicine (SPECT, PET), to ultrasound and impedance tomography — creates a multimodal platform where each technique provides a different projection of the patient’s condition. The logic of integration involves combining spatial resolution, tissue contrast, and functional sensitivity to address the specific clinical question.

The choice of modality is a decision made jointly by engineers and clinicians. If the question concerns bone architecture and mineralization, X-ray and CT techniques offer advantages. When soft tissue contrast, spectroscopy, and functional information are important, MRI with its fMRI variants and chemical shift imaging is used. Radiopharmaceuticals and gamma detection (SPECT, PET) are used to assess perfusion and metabolism. Ultrasound, thanks to piezoelectric transducers, combines morphological imaging with hemodynamic measurements (Doppler). Data integration is achieved either in hardware (PET/CT hybrids) or in software (image registration, fusion, and parametric mapping). The textbook organizes these techniques in a modular way, emphasizing that the reconstruction layer and image processing are as important as the equipment itself.

Ultrasound flow measurement is a good example: Doppler algorithms must take into account the insonation angle, velocity aliasing, and the sampling characteristics of the pulsed system. This problem illustrates a general rule of the information chain: the physics of the imaging mechanism determines the limits of processing and interpretation. A similar relationship applies to tomographic reconstruction: the choice of algorithm (e.g., filtered back projection (FBP) vs. iterative methods) affects the trade-off between noise and detail, so system operating parameters cannot be considered in isolation from the clinical objective.
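The roles of the insonation angle and of velocity aliasing follow from the standard Doppler equation, f_d = 2·f0·v·cos(θ)/c. The sketch below assumes the conventional soft-tissue sound speed of 1540 m/s. The function names and the example settings are illustrative, not taken from any particular scanner.

```python
import math

C_TISSUE = 1540.0  # m/s, conventional average sound speed in soft tissue


def doppler_shift_hz(velocity, f0, angle_deg, c=C_TISSUE):
    """Doppler shift (Hz) for flow velocity (m/s) at the given insonation angle."""
    return 2.0 * f0 * velocity * math.cos(math.radians(angle_deg)) / c


def velocity_from_shift(fd, f0, angle_deg, c=C_TISSUE):
    """Flow velocity (m/s) recovered from a measured Doppler shift."""
    cos_t = math.cos(math.radians(angle_deg))
    if abs(cos_t) < 1e-6:
        raise ValueError("velocity is undefined near 90 degrees insonation")
    return c * fd / (2.0 * f0 * cos_t)


def nyquist_velocity(prf, f0, angle_deg, c=C_TISSUE):
    """Highest unaliased velocity (m/s): the Doppler shift must stay below PRF/2."""
    return velocity_from_shift(prf / 2.0, f0, angle_deg, c)


if __name__ == "__main__":
    f0, prf = 5e6, 5000.0  # hypothetical transmit frequency and pulse repetition frequency
    print("aliasing limit at 60 deg:", nyquist_velocity(prf, f0, 60.0), "m/s")
    # Effect of assuming 60 deg when the true angle is 65 deg:
    fd = doppler_shift_hz(0.5, f0, 65.0)
    print("estimated velocity:", velocity_from_shift(fd, f0, 60.0), "m/s (true 0.5)")
```

With these settings the aliasing limit is 0.77 m/s, and a 5° angle error near 60° biases the velocity estimate by about 15 %. Both limits come from the physics of the measurement rather than from the software that processes it.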

Technology management and standards

Even the best-designed sensor and the most sophisticated algorithm will not translate into clinical value without the appropriate organizational infrastructure in place. This is where clinical engineering comes in, a discipline that has been developing since the 1960s and 1970s in response to the increasing complexity of hospital technologies and the need for systematic risk management. A clinical engineer is a specialist involved throughout the chain of hospital processes, from technology assessment through investment planning and equipment management to the creation of quality indicators, safety audits, and compliance with standards.

The evolution of clinical engineering has run parallel to the expansion of hospital departments, the standardization of electrical safety inspections, and the implementation of TQM/CQI methods for equipment supervision. Practice has shown that electrical faults were only the tip of the iceberg—equally dangerous were operational non-conformities, calibration errors, lack of training, and failure to consider the life cycle of equipment. In response, risk assessment tools, program indicators, and a review of regulations and standards were introduced to allow clinical engineers to prioritize maintenance and training activities.

In this context, overviews of standards bodies and regulatory agencies are important. Although specific sets of standards and regulatory structures are evolving, the hierarchy of standards itself maps the terrain that manufacturers and hospitals must navigate: from basic electrical safety requirements, through electromagnetic compatibility requirements and product category-specific standards, to clinical evaluations. Methods for calculating device risk and creating program indicators that reflect both process efficiency and patient safety fit into the same framework.

Medical instruments

The medical instruments and devices section focuses on signal quality, utilizing real-world clinical designs. Biopotential amplifiers, pressure and flow measurement systems, external and implantable defibrillators, stimulators, anesthesia devices, ventilators, and infusion pumps—all of these product classes transfer requirements from the sensing and analysis layer to the system layer: power supply, safety, isolation, control algorithms, and human-machine interface. The historical leap from hand tools to complex multimodal systems was made possible by the integration of electronics, materials science, computer science, and risk management.

For example, the design of a biopotential amplifier is not limited to increasing gain and reducing noise. It must also guarantee a high common-mode rejection ratio (CMRR), robustness to motion artifacts and power-line (mains) interference, input filtering that does not distort the diagnostic band, and safe coupling with the patient. When such an amplifier becomes part of a neuromuscular stimulator or defibrillator, issues of pulse energy quality, electrode geometry and materials, synchronization with the heart rhythm, as well as arrhythmia detection logic and feedback sensors come into play. This integrative approach closes the loop with the earlier sections of the textbook.
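Why a high CMRR matters can be shown with a short calculation: the common-mode voltage on the body, divided by the rejection ratio, appears as an equivalent differential error at the amplifier input. The sketch below uses the standard definition CMRR = 20·log10(A_d / A_cm). The function names and the 1 V common-mode level are assumptions chosen for illustration.

```python
import math


def cmrr_db(diff_gain, cm_gain):
    """Common-mode rejection ratio in dB from differential and common-mode gains."""
    return 20.0 * math.log10(abs(diff_gain / cm_gain))


def input_referred_interference(v_cm, cmrr_decibels):
    """Equivalent differential input error (V) caused by a common-mode voltage."""
    return v_cm / (10.0 ** (cmrr_decibels / 20.0))


if __name__ == "__main__":
    v_cm = 1.0  # V, hypothetical mains-induced common-mode voltage on the patient
    for cmrr in (60.0, 80.0, 100.0):
        err_uv = 1e6 * input_referred_interference(v_cm, cmrr)
        print(f"CMRR {cmrr:.0f} dB -> {err_uv:.0f} uV of apparent signal")
```

Against a surface ECG on the order of 1 mV, the 60 dB case leaves interference as large as the signal itself, while 100 dB reduces it to about 10 µV. The calculation assumes balanced electrode impedances. Any imbalance converts part of the common-mode voltage into a differential one and lowers the effective rejection.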

Dental and materials science topics

Although our primary focus is on sensors and signals, biomedical engineering is inextricably linked to materials science and dental treatment planning. Dental implantology is an example where imaging (CBCT/CT, MRI in specific applications, intraoral ultrasound), sensors (measurement of implant stability and occlusal forces), signal analysis (healing monitoring), and materials (titanium alloys, bioceramics) must be treated as a unified system. Decisions about implant surface characteristics, bone condition, implant bed quality, and prosthetic loading are based on data whose reliability is built from the first contact of the sensor with the patient to the final clinical validation. The material and sensor frameworks in the compendium support this approach: the sections on hard and soft biomaterials are logically linked to the sections on sensing and imaging, emphasizing the interdisciplinary nature of therapy design.

Transport phenomena and biomimetic systems

The chapters on transport phenomena and biomimetic systems demonstrate that even the most advanced computational tools are ineffective without a precise description of the system’s physics. Diffusion, convection in the microcirculation, heat conduction, and mass-transfer resistance in the arterial wall set the limits of detectability and interpretation for both signals and images. This is where measured electrical quantities are translated into biological parameters, such as permeability and diffusion coefficients, under stated boundary conditions. This layer of theory supports the design of targeted therapies (e.g., drug delivery to the brain) and also informs the measurement limitations that must be considered during clinical validation.

In system practice, all the layers described must come together in validation. Clinical metrology includes installation qualification (IQ), operational qualification (OQ), and performance qualification (PQ) of devices, with tests of electrical safety, EMC, accuracy, long-term stability, and environmental resistance, as well as verification of compliance with the intended clinical application profile. Sensors must undergo material and biocompatibility qualification, acquisition channels must undergo dynamic parameter verification, algorithms must undergo analytical and clinical validation with overfitting control, and imaging systems must undergo geometric and photometric correction. Clinical engineering organizes these activities into cycles of reviews and audits, combining risk analysis with documentation and user training. This is why, in the structure of the textbook, the sections on standards, program indicators, and technology management are an essential complement to the technical chapters.
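One common way to implement overfitting control during algorithm validation is to split the data by patient, so that recordings from the same person never appear in both the training and the test set. The text does not prescribe this method; the sketch below is a generic illustration, and the function name and patient identifiers are hypothetical.

```python
def patientwise_folds(patient_ids, n_folds):
    """Split sample indices into folds so that each patient falls in exactly one test fold.

    Returns a list of (train_indices, test_indices) tuples.
    """
    unique = sorted(set(patient_ids))
    if n_folds < 2 or n_folds > len(unique):
        raise ValueError("n_folds must be between 2 and the number of patients")
    # Assign patients to folds round-robin; a real study might also balance
    # the folds by diagnosis or by the number of recordings per patient.
    fold_of = {pid: i % n_folds for i, pid in enumerate(unique)}
    folds = []
    for f in range(n_folds):
        test_idx = [i for i, pid in enumerate(patient_ids) if fold_of[pid] == f]
        train_idx = [i for i, pid in enumerate(patient_ids) if fold_of[pid] != f]
        folds.append((train_idx, test_idx))
    return folds


# Hypothetical data set: seven recordings from four patients.
ids = ["p1", "p1", "p2", "p3", "p3", "p3", "p4"]
folds = patientwise_folds(ids, n_folds=2)
for train_idx, test_idx in folds:
    print("train:", train_idx, "test:", test_idx)
```

A random split at the level of individual recordings would let the classifier recognize patients rather than pathology, and the reported sensitivity and specificity would then overstate clinical performance.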

Outpatient and home care is a growing field in which the integration of sensing, connectivity, data compression, and clinical engineering translates into tangible health outcomes. Home-use devices must combine a user-friendly interface, autonomous safety control mechanisms, and remote transmission protocols. From an information chain perspective, it is crucial to ensure metrological consistency between the home and hospital settings so that decision-making algorithms do not lose their calibration due to environmental and usage differences. The design of home equipment must also account for atypical usage profiles, which influence the selection of sensors, self-diagnostic algorithms, and alarm policies.
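One way to quantify metrological consistency between a home device and a hospital reference is a Bland-Altman style agreement analysis, which reports the mean difference (bias) and the 95 % limits of agreement. This technique is brought in here as an illustration and is not named in the source. The paired readings below are invented.

```python
import statistics


def limits_of_agreement(device_a, device_b):
    """Return (bias, lower, upper): mean difference and 95 % limits of agreement."""
    if len(device_a) != len(device_b) or len(device_a) < 2:
        raise ValueError("need at least two paired measurements")
    diffs = [a - b for a, b in zip(device_a, device_b)]
    bias = statistics.mean(diffs)
    spread = 1.96 * statistics.stdev(diffs)  # sample standard deviation
    return bias, bias - spread, bias + spread


# Hypothetical paired systolic pressure readings (mmHg) from the same patients.
home = [121, 118, 130, 125, 140]
hospital = [120, 120, 128, 126, 137]
bias, lower, upper = limits_of_agreement(home, hospital)
print(f"bias={bias:.2f} mmHg, limits of agreement=({lower:.2f}, {upper:.2f}) mmHg")
```

If the limits of agreement are wider than the clinically acceptable error, thresholds tuned on hospital data should not be applied to home measurements without recalibration.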

Data analysis and validation in biomedical engineering – summary

The integrated chain from photon to clinical decision is the practical architecture of modern biomedical engineering. Its first segment, the sensors, determines the quality of information at the source. At the metal-electrolyte interface, in optical waveguides, or in enzymatic transduction systems, noise, drift, and nonlinearities are present, and no algorithmic magic can later remove them without a loss of information.

Signal acquisition and analysis give this information structure through adaptive filtering, time-frequency representations, higher-order methods, machine learning, and compression, always respecting the physiology of the signal and the limitations of the measurement path. Imaging provides a rich morphological and functional context that, when fused with temporal signals, creates a more complete picture of the patient. The whole must be embedded in clinical engineering, encompassing technology management, quality indicators, standards, and risk assessment, because only then does metrological precision translate into clinical safety.

In dental practice, such integration translates into implantation planning, monitoring of healing, and assessment of stability and functional load. In general medicine, it translates into effective implantable therapies, ventilation, sedation, infusions, and vital-sign monitoring, including in the patient’s home. The overriding conclusion is the need for systemic design, encompassing materials and sensors, electronics and software, as well as organizational and training processes. Without this perspective, it is difficult to speak of a real translation of technology into clinical outcomes.

Bibliography

Bronzino, J.D. (ed.). The Biomedical Engineering Handbook. Second Edition. CRC Press, Boca Raton, 2000.